Apache Arrow Rust 57.0.0 Release

Published 30 Oct 2025 by The Apache Arrow PMC (pmc)

The Apache Arrow team is pleased to announce that the v57.0.0 release of Apache Arrow Rust is now available on crates.io (arrow and parquet) and as a source download.

See the 57.0.0 changelog for a full list of changes.

New Features

Note: Arrow Rust hosts the development of the parquet crate, a high-performance Rust implementation of Apache Parquet.

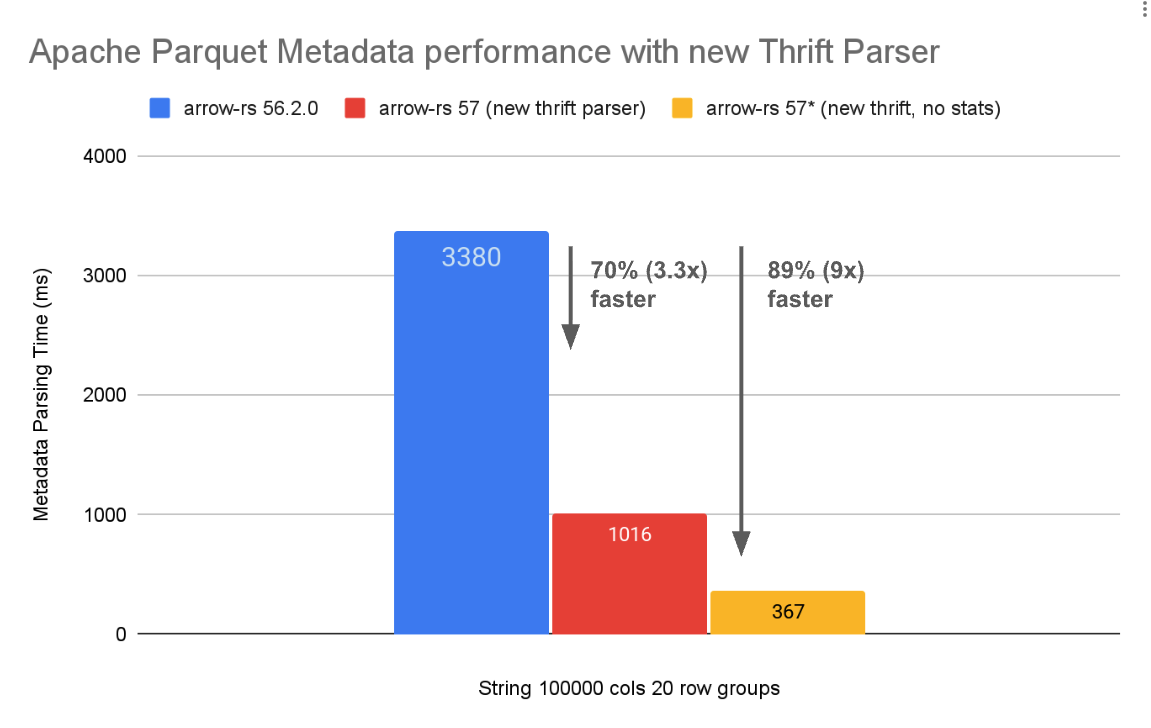

Performance: 4x Faster Parquet Metadata Parsing 🚀

Ed Seidl (@etseidl) and Jörn Horstmann (@jhorstmann) contributed a rewritten Thrift metadata parser for Parquet files, which is almost 4x faster than the previous parser based on the thrift crate. This is especially exciting for low-latency use cases and for reading Parquet files with large amounts of metadata (e.g. many row groups or columns).

See the blog post about the new Parquet metadata parser for more details.

Figure 1: Performance improvements of Apache Parquet metadata parsing between version 56.2.0 and 57.0.0.
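To give a feel for the kind of low-level work a Thrift metadata parser performs: Parquet footers use the Thrift compact protocol, which writes integers as variable-length (ULEB128) values and zigzag-encodes signed integers first. The following is a minimal, standard-library-only sketch of that decoding step. It is not the code from the parquet crate, and the function names `read_uvarint`, `zigzag_decode`, and `read_i64` are hypothetical names chosen for illustration.

```rust
/// Decode an unsigned LEB128 varint from the start of `buf`.
/// Returns the value and the number of bytes consumed, or `None` if the
/// input ends before the varint does or the varint exceeds 10 bytes.
fn read_uvarint(buf: &[u8]) -> Option<(u64, usize)> {
    let mut value: u64 = 0;
    for (i, &byte) in buf.iter().enumerate().take(10) {
        // The low 7 bits carry payload, least-significant group first.
        value |= u64::from(byte & 0x7f) << (7 * i);
        // A clear high bit marks the final byte of the varint.
        if byte & 0x80 == 0 {
            return Some((value, i + 1));
        }
    }
    None
}

/// Undo zigzag encoding: 0 -> 0, 1 -> -1, 2 -> 1, 3 -> -2, ...
fn zigzag_decode(n: u64) -> i64 {
    ((n >> 1) as i64) ^ -((n & 1) as i64)
}

/// Read a signed integer encoded as a zigzag varint.
fn read_i64(buf: &[u8]) -> Option<(i64, usize)> {
    let (raw, len) = read_uvarint(buf)?;
    Some((zigzag_decode(raw), len))
}
```

For instance, `read_i64(&[0xAC, 0x02])` yields `Some((150, 2))`: the two bytes decode to the unsigned value 300, which zigzag-decodes to 150. A metadata footer with many row groups and columns contains a very large number of such fields, which is one reason the speed of this decoding path matters.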

New arrow-avro Crate

The 57.0.0 release introduces a new arrow-avro crate contributed by @jecsand838 and @nathaniel-d-ef that provides much more efficient conversion between Apache Avro and Arrow RecordBatches, as well as broader feature support.

Previously, Arrow-based systems that read or wrote Avro data typically used the general-purpose apache-avro crate. While mature and feature-complete, its row-oriented API does not support features such as projection pushdown or vectorized execution. The new arrow-avro crate supports these features efficiently by converting Avro data directly into Arrow's columnar format.

See the blog post about adding arrow-avro for more details.

Figure 2: Architecture of the arrow-avro crate.
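The difference between a row-oriented API and a columnar one with projection can be illustrated with a toy, standard-library-only sketch. This is not the arrow-avro API: the `Row` and `Columns` types and the `to_columns` function are hypothetical, and a real decoder would skip the encoded bytes of unprojected fields rather than start from already-decoded rows.

```rust
/// A hypothetical row-oriented record, standing in for a decoded Avro record.
#[derive(Debug)]
struct Row {
    id: i64,
    name: String,
    score: f64,
}

/// Columnar output: one vector per field, present only if it was projected.
#[derive(Debug, PartialEq)]
struct Columns {
    id: Option<Vec<i64>>,
    name: Option<Vec<String>>,
    score: Option<Vec<f64>>,
}

/// Convert rows into columns, materializing only the fields named in
/// `projection`. Unprojected fields are never copied.
fn to_columns(rows: &[Row], projection: &[&str]) -> Columns {
    let wants = |field: &str| projection.iter().any(|p| *p == field);
    Columns {
        id: wants("id").then(|| rows.iter().map(|r| r.id).collect()),
        name: wants("name").then(|| rows.iter().map(|r| r.name.clone()).collect()),
        score: wants("score").then(|| rows.iter().map(|r| r.score).collect()),
    }
}
```

Calling `to_columns(&rows, &["id", "score"])` produces contiguous `id` and `score` vectors and leaves `name` as `None`. Contiguous per-field vectors are what make vectorized execution possible, and skipping unneeded fields is the essence of projection pushdown.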

Parquet Variant Support 🧬

The Apache Parquet project recently added a new Variant type for representing semi-structured data. The 57.0.0 release includes support for reading and writing both normal and shredded Variant values to and from Parquet files. It also includes parquet-variant, a complete library for working with Variant values; VariantArray, for working with arrays of Variant values in Apache Arrow; and computation kernels for converting to/from JSON and Arrow types, extracting paths, and shredding values.

```rust
// Use the VariantArrayBuilder to build a VariantArray
let mut builder = VariantArrayBuilder::new(3);
builder.new_object().with_field("name", "Alice").finish(); // row 1: {"name": "Alice"}
builder.append_value("such wow"); // row 2: "such wow" (a string)
let array = builder.build();

// Since VariantArray is an ExtensionType, it needs to be converted
// to an ArrayRef and Field with the appropriate metadata
// before it can be written to a Parquet file
let field = array.field("data");
let array = ArrayRef::from(array);

// create a RecordBatch with the VariantArray
let schema = Schema::new(vec![field]);
let batch = RecordBatch::try_new(Arc::new(schema), vec![array])?;

// Now you can write the RecordBatch to the Parquet file, as normal
let file = std::fs::File::create("variant.parquet")?;
let mut writer = ArrowWriter::try_new(file, batch.schema(), None)?;
writer.write(&batch)?;
writer.close()?;
```

This support is being integrated into query engines, for example through @friendlymatthew's datafusion-variant crate for DataFusion, and into delta-rs. While this support is still experimental, we believe the APIs are mostly complete and do not expect major changes. Please consider trying it out and providing feedback and improvements.

Thanks to the many contributors who made this possible, including:

- Ryan Johnson (@scovich), Congxian Qiu (@klion26), and Liam Bao (@liamzwbao) for completing the implementation

- Li Jiaying (@PinkCrow007), Aditya Bhatnagar (@carpecodeum), and Malthe Karbo (@mkarbo) for initiating the work

- Everyone else who has contributed, including @superserious-dev, @friendlymatthew, @micoo227, @Weijun-H, @harshmotw-db, @odysa, @viirya, @adriangb, @kosiew, @codephage2020, @ding-young, @mbrobbel, @petern48, @sdf-jkl, @abacef, and @mprammer.

See the ticket Variant type support in Parquet #6736 for more details.

Parquet Geometry Support 🗺️

The 57.0.0 release also includes support for reading and writing the Parquet Geometry types, GEOMETRY and GEOGRAPHY, including GeospatialStatistics. This work was contributed by Kyle Barron (@kylebarron), Dewey Dunnington (@paleolimbot), Kaushik Srinivasan (@kaushiksrini), and Blake Orth (@BlakeOrth).

Please see the Implement Geometry and Geography type support in Parquet tracking ticket for more details.

Thanks to Our Contributors

```
$ git shortlog -sn 56.0.0..57.0.0
    36  Matthijs Brobbel
    20  Andrew Lamb
    13  Ryan Johnson
    11  Ed Seidl
    10  Connor Sanders
     8  Alex Huang
     5  Emil Ernerfeldt
     5  Liam Bao
     5  Matthew Kim
     4  nathaniel-d-ef
     3  Raz Luvaton
     3  albertlockett
     3  dependabot[bot]
     3  mwish
     2  Ben Ye
     2  Congxian Qiu
     2  Dewey Dunnington
     2  Kyle Barron
     2  Lilian Maurel
     2  Mark Nash
     2  Nuno Faria
     2  Pepijn Van Eeckhoudt
     2  Tobias Schwarzinger
     2  lichuang
     1  Adam Gutglick
     1  Adam Reeve
     1  Alex Stephen
     1  Chen Chongchen
     1  Jack
     1  Jeffrey Vo
     1  Jörn Horstmann
     1  Kaushik Srinivasan
     1  Li Jiaying
     1  Lin Yihai
     1  Marco Neumann
     1  Piotr Findeisen
     1  Piotr Srebrny
     1  Samuele Resca
     1  Van De Bio
     1  Yan Tingwang
     1  ding-young
     1  kosiew
     1  张林伟
```