Connecting Relational Databases to the Apache Arrow World with turbodbc

Published

16 Jun 2017

By

Michael König (MathMagique)

Michael König is the lead developer of the turbodbc project

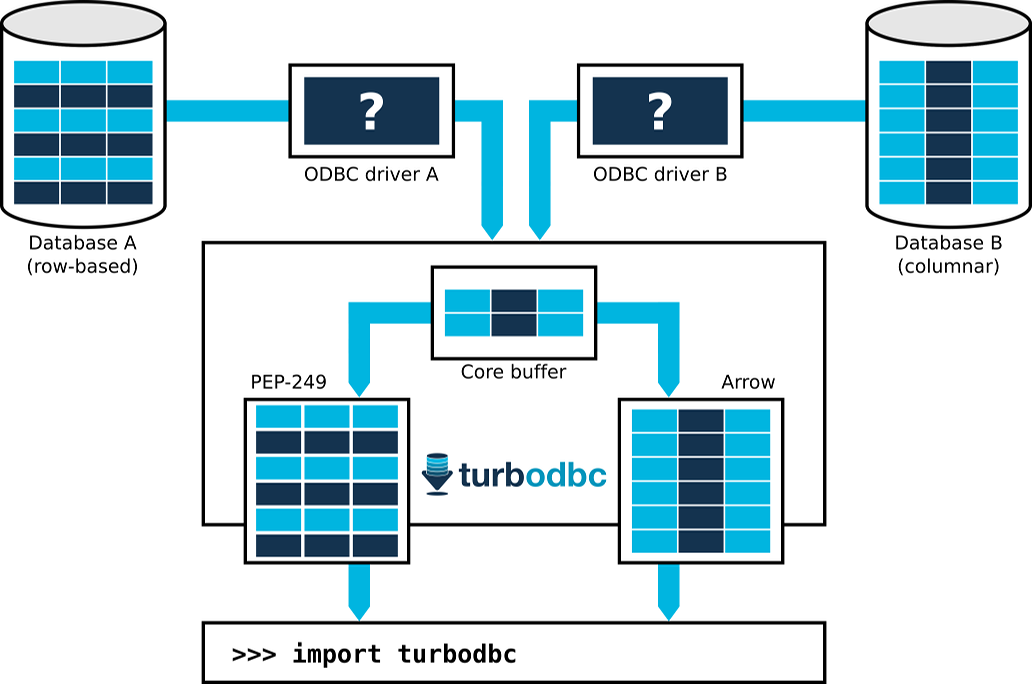

The Apache Arrow project set out to become the universal data layer for column-oriented data processing systems without incurring serialization costs or compromising on performance on a more general level. While relational databases still lag behind in Apache Arrow adoption, the Python database module turbodbc brings Apache Arrow support to these databases using a much older, more specialized data exchange layer: ODBC.

ODBC is a database interface that offers developers the option to transfer data either in row-wise or column-wise fashion. Previous Python ODBC modules typically use the row-wise approach, and often trade repeated database roundtrips for simplified buffer handling. This makes them less suited for data-intensive applications, particularly when interfacing with modern columnar analytical databases.

In contrast, turbodbc was designed to leverage columnar data processing from day one. Naturally, this implies using the columnar portion of the ODBC API. Equally important, however, is to find new ways of providing columnar data to Python users that exceed the capabilities of the row-wise API mandated by Python’s PEP 249. Turbodbc has adopted Apache Arrow for this very task with the recently released version 2.0.0:

>>> from turbodbc import connect

>>> connection = connect(dsn="My columnar database")

>>> cursor = connection.cursor()

>>> cursor.execute("SELECT some_integers, some_strings FROM my_table")

>>> cursor.fetchallarrow()

pyarrow.Table

some_integers: int64

some_strings: string

With this new addition, the data flow for a result set of a typical SELECT query is like this:

- The database prepares the result set and exposes it to the ODBC driver using either row-wise or column-wise storage.

- Turbodbc has the ODBC driver write chunks of the result set into columnar buffers.

- These buffers are exposed to turbodbc's Apache Arrow frontend. This frontend will create an Arrow table and fill in the buffered values.

- The previous steps are repeated until the entire result set is retrieved.

{:class="img-responsive" width="75%"}

{:class="img-responsive" width="75%"}

In practice, it is possible to achieve the following ideal situation: A 64-bit integer column is stored as one contiguous block of memory in a columnar database. A huge chunk of 64-bit integers is transferred over the network and the ODBC driver directly writes it to a turbodbc buffer of 64-bit integers. The Arrow frontend accumulates these values by copying the entire 64-bit buffer into a free portion of an Arrow table's 64-bit integer column.

Moving data from the database to an Arrow table and, thus, providing it to the Python user can be as simple as copying memory blocks around, megabytes equivalent to hundred thousands of rows at a time. The absence of serialization and conversion logic renders the process extremely efficient.

Once the data is stored in an Arrow table, Python users can continue to do some

actual work. They can convert it into a Pandas DataFrame for data analysis

(using a quick table.to_pandas()), pass it on to other data processing

systems such as Apache Spark or Apache Impala (incubating), or store

it in the Apache Parquet file format. This way, non-Python systems are

efficiently connected with relational databases.

In the future, turbodbc’s Arrow support will be extended to use more sophisticated features such as dictionary-encoded string fields. We also plan to pick smaller than 64-bit data types where possible. Last but not least, Arrow support will be extended to cover the reverse direction of data flow, so that Python users can quickly insert Arrow tables into relational databases.

If you would like to learn more about turbodbc, check out the GitHub project and the project documentation. If you want to learn more about how turbodbc implements the nitty-gritty details, check out parts one and two of the "Making of turbodbc" series at Blue Yonder's technology blog.